The only way to mitigate the consistent and persistent “negative externalities” of Facebook and other social media platforms is through government intervention. Self-regulation has been a disaster for the public.

And given the phenomenal political power and financial heft of the tech/media platforms, the counter-force that government applies must be heavy and multilateral.

Prime Minister Scott Morrison is right to commit to putting the misbehaviour of these tech giants on the G20 agenda for its next meeting in Japan, but the better place to start this difficult process is in the Australian Parliament.

We get to decide the rules of behaviour in this country. And with the slow recognition of the dangerous business models of Facebook and the others – and the frankly disgusting way in which these companies deny any responsibility for the impact of their behaviour – it is time to act in our own self-interest.

To say that Australia should do nothing because we are small and have little influence is as vacuous as saying Australia should do nothing on climate change issues for the same reasons. The self-hatred of this view is crippling.

The shocking massacre in Christchurch is a catalyst for our small part of the world.

Regulatory intervention cannot just be about live-streaming. There are far deeper issues here that go to the heart of the Facebook business model, and the incentives that drive its officers.

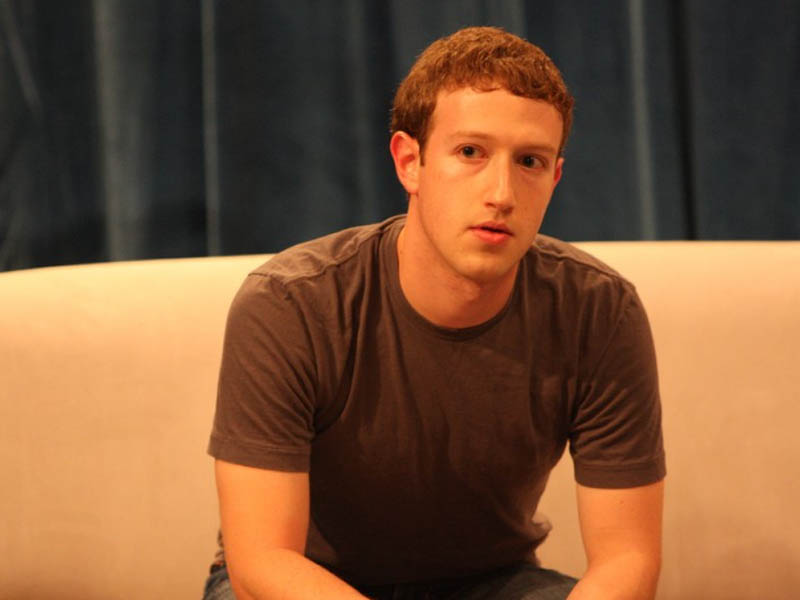

I don’t think anyone wants to hear Mark Zuckerberg say ‘we have to do better’ or ‘it’s not good enough’ again. However well-intentioned he might be, he has proven himself to be full of shit on self-regulation.

Facebook collects and shares the data of individuals to influence the behaviour of those individuals, and to sell that influence to advertisers. It nudges the behaviour of its users through its very smart, very sophisticated ‘engagement’ strategies.

The filter bubbles created by social media platforms are dangerous to some people, and their impact is society-wide.

If you are interested in the dangers posed by the misalignment of Facebook’s business model and the harm that it causes individuals and societies, Roger McNamee’s Zucked – Waking up to the Facebook Catastrophe is an excellent primer.

McNamee is an unlikely anti-Facebook activist. He is a long-time Silicon Valley venture capitalist and a former mentor to Mark Zuckerberg who helped get Sheryl Sandberg into the company, and he remains a shareholder as one of the company’s earliest investors.

“It is possible that the worst damage from Facebook and the other platforms is behind us, but that is not where the smart money will place its bet.”

“The most likely case is that the technology and business model of Facebook and others will continue to undermine democracy, public health, privacy and innovation until a countervailing power in the form of government intervention or user protests forces changes.”

He says Mr Zuckerberg embraced invasive surveillance, the careless sharing of private data, and behaviour modification in pursuit of unprecedented scale.

Facebook’s success bred an overconfidence to the point where the company has been unable to recognise the damage it has caused.

The book describes how “even the best of ideas in the hands of people with the best of intentions can still go terribly wrong.”

“Imagine a stew of unregulated capitalism, addictive technology and authoritarian values combined with Silicon Valley’s relentlessness and hubris unleashed on billions of unsuspecting users,” Mr McNamee writes.

“The day will come sooner than I could have imagined even a couple of years ago when the world will recognise that the value that the users receive from the Facebook-dominated, social media attention economy revolution, has masked an unmitigated disaster for our democracy, for public health, for personal privacy and for the economy.”

At its heart, the problems derived from the Facebook business model relate directly to the ‘filter bubbles’ its algorithms create. McNamee says the company has become very, very good at delivering a “customised Truman Show” for every single user.

“It starts out giving users what they want, but the algorithms are trained to nudge user attention in directions that Facebook wants,” Mr McNamee writes.

“The algorithms choose posts that are calculated to press emotional buttons, because scaring users or pissing them off increases time on site.

“When users pay attention, Facebook calls it engagement. But the goal is behaviour modification. That makes advertising more valuable. Facebook’s advertising business model depends on engagement, which can best be triggered through appeals to our most basic emotions.

“Joy also works – which is why puppy and cat videos and photos of babies are so popular – [but] not everyone reacts the same way to happy content. Some people get jealous, for example.

“Lizard brain emotions such as fear and anger produce a more uniform reaction and are more viral in a mass audience. When consumers are riled up, they consume and share more content.

“Dispassionate users have little value to Facebook, which does everything in its power to activate the lizard brain.”

Do you know more? Contact James Riley via Email.