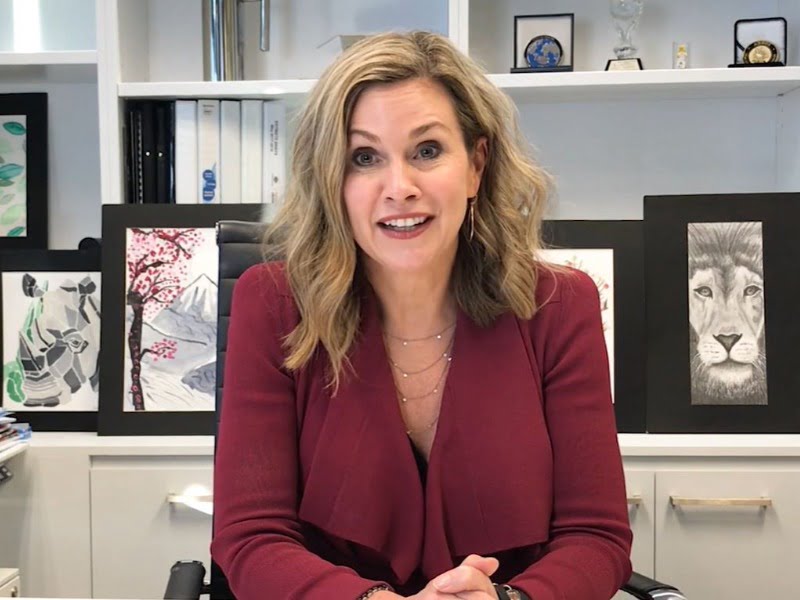

Australia’s eSafety Commissioner Julie Inman Grant has ordered the tech giants behind some of the biggest social media and messaging apps to detail what measures they are taking to tackle child exploitation material.

Legal notices were issued to Apple, Meta (and WhatsApp), Microsoft (and Skype), Snap and online chat website owner Omegle on Monday, with civil penalties of up to $555,000 a day on the table if the companies fail to respond within 28 days.

The mechanism is one of three means available under the government’s Basic Online Safety Expectations (BOSE) to help ‘lift the hood’ on the online safety initiatives being pursued by social media, messaging and gaming service providers.

Ms Inman Grant is concerned that as companies adopt end-to-end encrypted messaging and deploy livestreaming features, child exploitation material will be allowed to spread “unchecked”.

“Child sexual exploitation material that is reported now is just the tip of the iceberg – online sexual abuse that isn’t being detected and remediated continues to be a huge concern,” she said when announcing the action on Tuesday.

According to an Australian Institute of Criminology report last month, Meta made 93 per cent of the 21.7 million child sexual abuse material reports to the US-based National Center for Missing and Exploited Children in 2020. The next-largest reporter was Google, with 546,704 reports.

In Australia, the majority of the 61,000 complaints about illegal and restricted content made to the Office of the eSafety Commissioner since 2015 have involved child sexual exploitation material, a trend that has only increased since the pandemic.

“We have seen a surge in reports about this horrific material since the start of the pandemic, as technology was weaponised to abuse children,” Ms Inman Grant said, adding that harm to victim-survivors occurs when platforms fail to detect and remove the content.

The legal notices ask service providers whether they are deploying any “technical tools” to identify child sexual exploitation content, including in livestreams and on end-to-end encrypted services, and what steps they are taking to prevent banned users from creating new accounts.

The companies were selected for several reasons, including the reach of their services, the number of complaints received by the eSafety Commissioner, or their lack of public information on safety initiatives. Further notices are expected to be issued to additional providers in “due course”.

Ms Inman Grant said that while “proven tools” exist to stop this material, many tech companies “publish insufficient information about where or how these tools operate, and too often claim that certain safety measures are not technically feasible”.

“Industry must be upfront on the steps they are taking, so that we can get the full picture of online harms occurring and collectively focus on the real challenges before all of us. We all have a responsibility to keep children free from online exploitation and abuse,” she said.

The eSafety Commissioner is also in the process of drafting mandatory codes with industry that will require “providers of online products and services to do more to address the risk of harmful material”.

Ms Inman Grant said the next steps would involve public consultation on the draft mandatory codes, which will sit alongside BOSE and the Online Safety Act with a view to creating an “umbrella of online protections for Australians of all ages”.

Do you know more? Contact James Riley via email.