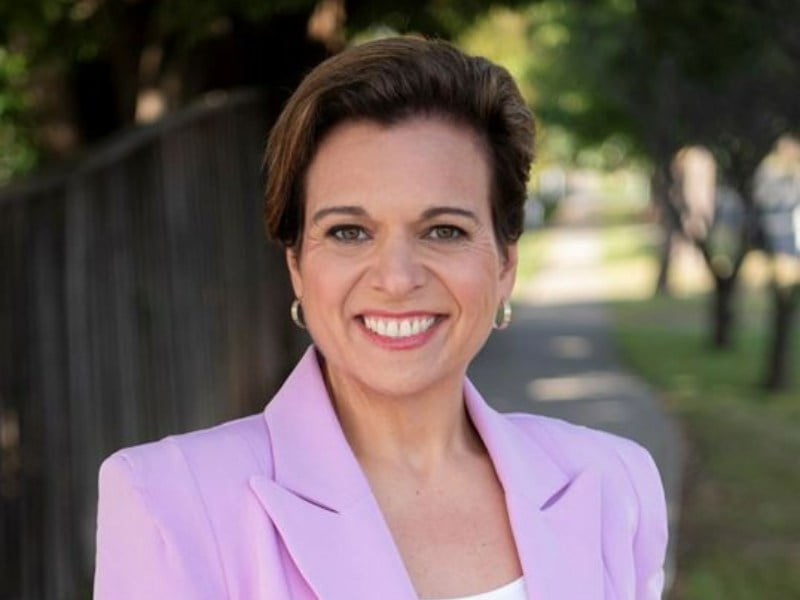

Communications minister Michelle Rowland has released draft legislation that would give the Australian Communications and Media Authority (ACMA) new powers to hold social media companies and other digital platforms accountable for harmful misinformation and disinformation on their services.

Ms Rowland on Sunday opened a consultation period seeking industry and community feedback on a range of proposed new powers that would enable ACMA to gather information from digital platforms and to require platforms to keep records on matters regarding misinformation and disinformation.

The draft legislation enables ACMA to register and enforce an industry-designed code of practice. It also gives ACMA powers to create and enforce a mandatory code of practice where the industry-developed code is found to be ineffective in combatting misinformation and disinformation on digital platforms.

Under the proposed legislation, digital platform companies could face fines of up to $6.8 million for failing to address misinformation and disinformation on their platforms.

Ms Rowland said misinformation and disinformation can sow division in the community, undermine trust, and even threaten public health and safety.

“This consultation process gives industry and the public the opportunity to have their say on the proposed framework, which aims to strike the right balance between protection from harmful mis- and disinformation online and freedom of speech,” she said.

The draft legislation focuses on systemic issues that pose a risk of harm on the platforms. It does not give ACMA the power to determine what is true or false on platforms, nor does it give ACMA the power to remove individual content or posts.

Under the draft framework, digital platforms continue to be responsible for the content they host and promote to users. If platforms fail to act to combat misinformation and disinformation over time, the ACMA would be able to draw on its reserve powers to register enforceable industry codes – with significant penalties for non-compliance.

The code and standard-making powers will not apply to professional news content or authorised electoral content.

Ms Rowland said the proposed powers implement key recommendations from ACMA’s June 2021 report to government on the adequacy of digital platforms’ disinformation and news quality measures.

The proposed powers also build on, and are intended to strengthen and support, the voluntary code developed by the Digital Industry Group Inc (DIGI), an industry association.

DIGI managing director Sunita Bose said the association shares the government’s commitment to protecting Australians from harms related to misinformation and disinformation.

This had been demonstrated through DIGI’s work in developing the Australian Code of Practice on Disinformation and Misinformation (ACPDM), she said.

“In principle, DIGI supports the granting of new powers to the ACMA that are broadly consistent with their previous recommendations, and we will be closely reviewing the legislation and look forward to participating in the public consultation,” Ms Bose said.

“DIGI is committed to driving improvements in the management of mis- and disinformation in Australia, demonstrated through our track record of work with signatory companies to develop and strengthen the industry code,” she said.

“We welcome reinforcement of DIGI’s efforts and the formalisation of our long-term working relationship with the ACMA, as sustained shifts in the fight against mis- and disinformation require a multi-stakeholder approach.”

The ACPDM has been adopted by eight signatories: Apple, Adobe, Google, Meta, Microsoft, Redbubble, TikTok and Twitter. Each has cemented its mandatory commitments, and nominated additional opt-in commitments, through public disclosures on the DIGI website.

The ACPDM was developed in response to a policy announced in December 2019, in relation to the ACCC Digital Platforms Inquiry, under which the digital industry was asked to develop a voluntary code of practice on disinformation.

DIGI developed the original code with assistance from the University of Technology Sydney’s Centre for Media Transition, and First Draft, a global organisation that specialises in helping societies overcome false and misleading information.
