Defence and national intelligence agencies have been carved out of the first policy for responsible use of AI by government, which launched on Thursday to engender trust in the technology that Australians are slow to adopt.

In force from next month, the policy’s mandatory transparency, governance and risk assurance measures aim to position government as an exemplar of responsible AI use, capturing the technology’s benefits while strengthening public trust.

Agencies will have to nominate accountable officials for AI, disclose new “high-risk” use cases to the Digital Transformation Agency, and publish annual statements on their approach to adoption and use.

Non-mandatory but strongly recommended measures include AI fundamentals training for all staff and additional training for public servants involved in the procurement, development or deployment of the technology.

The government’s own policy comes before economy-wide guardrails are in place, with Industry minister Ed Husic yet to announce any movement on the work of experts he tapped six months ago to develop regulatory options.

Dozens of artificial intelligence projects were underway across the federal government last year with little regard for governance frameworks and only limited whole-of-government advice.

A nationwide framework laying out clear human rights expectations for the use of AI was released in June, and interim guidance on generative AI has been in place for a year.

A wider Policy for the responsible use of AI in government was launched on Thursday by Finance minister Katy Gallagher and Mr Husic.

Senator Gallagher said the technology has the potential to lift government productivity and improve services.

“The ATO is already using AI to transcribe inbound calls to their call centre and detect patterns and trends in the topics raised by callers. An AI-enabled app by the Department for Agriculture, Fisheries and Forestry and the CSIRO is helping them to identify pests,” she said.

“These are just some of the ways that we see AI being able to help public servants to do their jobs.”

The new policy applies to non-corporate Commonwealth entities, but corporates are encouraged to apply it too.

From September 1, the agencies will have 90 days to nominate accountable officials and will be able to split responsibilities across roles such as chief information, data or technology officers.

The officials will be accountable for the implementation of the policy and will serve as a contact point for whole-of-government AI coordination. The officials will also have to notify the DTA where the agency has identified a new high-risk use case.

“This information will be used by the DTA to build visibility and inform the development of further risk mitigation approaches,” the policy states.

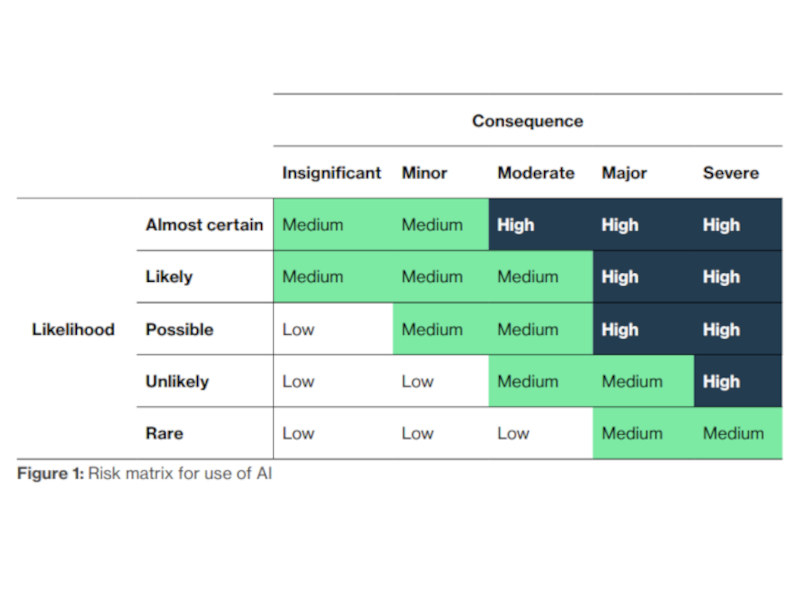

The policy relies on the OECD definition of AI and includes its own risk matrix to help agencies assess high-risk use. The high-risk range spans from moderate consequences that are almost certain to severe consequences that are unlikely but not rare.

The policy does not apply to the use of AI in the defence portfolio or national intelligence community agencies, carving out dozens of entities, including many likely to be involved in high-risk applications of the technology.

Options for economy-wide AI ‘guardrails’ that could include law reforms have been under development by AI experts who were brought on in February, after initial consultations on AI regulation last year.

Mr Husic is yet to announce any new binding rules for AI beyond government but said the latest policy is part of the process.

“This policy is a first step in the journey to position the Australian government as a world leader in its safe and responsible use of AI,” Mr Husic said.

“It sits alongside whole-of-economy measures such as mandatory guardrails and voluntary industry safety measures.”

Do you know more? Contact James Riley via Email.